🧠 Introduction

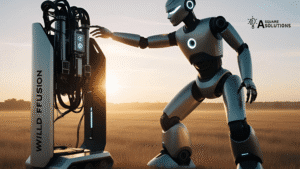

Robots are already revolutionizing industries—from manufacturing to space exploration—but they still struggle to perform reliably in unpredictable outdoor environments. Imagine a robot stumbling in a forest because it can’t distinguish mud from gravel, or losing balance on a hillside trail. To overcome these limitations, a team of researchers at Duke University has created a new framework called WildFusion—a system that fuses vision, touch, and sound to give robots human-like perception.

This blog post dives deep into how WildFusion works, why it matters, and what it means for the future of robotics and AI.

📸 What is WildFusion?

WildFusion is a multimodal sensor fusion framework. This means it doesn’t rely on just one type of input—like vision—but integrates data from multiple senses, similar to how humans navigate the world.

Key Modalities Fused:

- Visual Sensing: RGB cameras and LiDAR for shape, color, and spatial awareness
- Vibration Sensing: Contact microphones detect terrain textures (e.g., gravel vs. leaves)
- Touch Sensing: Pressure sensors measure how much force each robotic leg experiences
- Inertial Data: IMUs (Inertial Measurement Units) detect motion changes like shaking or wobbling
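To make the vibration cue more concrete, here is a minimal Python sketch of how a short contact-microphone clip could be reduced to two simple texture features: RMS energy (how strong the vibration is) and zero-crossing rate (a rough proxy for how rapidly it fluctuates). The function name `vibration_features`, the choice of features, and the synthetic "gravel" and "leaves" signals are all illustrative assumptions. The source does not say which features WildFusion actually extracts.

```python
import math
from typing import Sequence


def vibration_features(samples: Sequence[float]) -> dict[str, float]:
    """Compute two simple texture cues from a contact-microphone clip.

    Hypothetical helper: RMS energy (vibration strength) and zero-crossing
    rate (fraction of consecutive sample pairs whose sign flips).
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    # A crossing is counted when one sample is negative and the next is not
    # (zero is treated as non-negative).
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0))
    zcr = crossings / (len(samples) - 1)
    return {"rms": rms, "zcr": zcr}


# Made-up signals: a strong, rapidly alternating "gravel" clip and a weak,
# slowly varying "leaves" clip (two periods of a small sine wave).
gravel = [0.8 if i % 2 == 0 else -0.8 for i in range(100)]
leaves = [0.2 * math.sin(2 * math.pi * i / 50) for i in range(100)]

print(vibration_features(gravel))
print(vibration_features(leaves))
```

On these made-up signals the gravel-like clip scores higher on both features, which is the kind of difference a terrain classifier could learn to exploit.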

📊 Table: Contribution of Each Sensor Type to Terrain Understanding

| Sensor Type | Contribution to Terrain Understanding (%) |
|---|---|
| Vision (Camera + LiDAR) | 40% |
| Vibration (Contact Mics) | 25% |
| Touch (Pressure Sensors) | 20% |
| Inertial (IMU) | 15% |

Data interpreted from testing trials conducted by the Duke University research team.

🧪 Real-World Testing: From Lab to Forest

The framework was tested using a quadrupedal robot in Eno River State Park, North Carolina. The robot walked through grassy fields, rocky patches, muddy trails, and forest floors—terrains where many robots typically struggle.

Findings:

- The robot was able to detect and adjust to changing terrain types even when visual information was poor (e.g., in dim light or with obstructed views).
- Vibration and touch cues helped it stay balanced on slippery or uneven surfaces.
- When visual cues were misleading, such as when leaves covered holes, WildFusion enabled better decisions based on tactile and inertial feedback.

🧭 Why Does This Matter?

Robots without good perception are limited to flat, controlled environments. But our world is rarely predictable. Here’s why WildFusion is a breakthrough:

🌪 Disaster Response

In post-earthquake or wildfire zones, terrain is dangerous and unstable. WildFusion-equipped robots could help locate survivors or deliver supplies by navigating through debris.

🌲 Environmental Monitoring

Scientists could deploy autonomous robots deep into forests or mountain ranges for wildlife tracking, air sampling, or soil testing without the need for human handlers.

🚜 Agriculture

In rural or hilly farmland, robots need to recognize terrain changes to plow, sow, or harvest efficiently and safely.

🧠 How Does WildFusion Work Behind the Scenes?

1. Sensory Data Encoding

Each type of input (vision, sound, touch, inertial) is fed through its own encoder module. Each encoder converts its raw signal into a feature representation in a shared format, so that the next stage can combine all the senses into a single representation.
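A minimal sketch of the encoding idea, assuming each encoder is simply a fixed linear projection into a shared feature size. The class `LinearEncoder`, the constant `SHARED_DIM`, and the per-modality input sizes are hypothetical; a real system would use trained networks suited to each signal type.

```python
import random
from typing import Sequence

SHARED_DIM = 4  # size of the shared feature space (hypothetical choice)


class LinearEncoder:
    """Toy stand-in for a learned modality encoder: a fixed random linear map.

    Real encoders would be trained; the random weights here are for illustration.
    """

    def __init__(self, in_dim: int, out_dim: int = SHARED_DIM, seed: int = 0):
        rng = random.Random(seed)  # seeded so the example is reproducible
        self.in_dim = in_dim
        self.weights = [[rng.uniform(-1, 1) for _ in range(in_dim)] for _ in range(out_dim)]

    def __call__(self, x: Sequence[float]) -> list[float]:
        if len(x) != self.in_dim:
            raise ValueError(f"expected {self.in_dim} inputs, got {len(x)}")
        # Matrix-vector product: one output value per row of weights.
        return [sum(w * v for w, v in zip(row, x)) for row in self.weights]


# One encoder per modality; raw input sizes differ (hypothetical values).
encoders = {
    "vision": LinearEncoder(in_dim=8, seed=1),
    "vibration": LinearEncoder(in_dim=2, seed=2),
    "touch": LinearEncoder(in_dim=4, seed=3),     # e.g., one force reading per leg
    "inertial": LinearEncoder(in_dim=6, seed=4),  # e.g., 3-axis accel + 3-axis gyro
}

raw = {
    "vision": [0.1] * 8,
    "vibration": [0.8, 1.0],
    "touch": [12.0, 11.5, 13.2, 12.8],
    "inertial": [0.0, 0.1, 9.8, 0.01, 0.02, 0.0],
}

features = {name: enc(raw[name]) for name, enc in encoders.items()}
# Every modality now lives in the same SHARED_DIM-sized space.
print({name: len(vec) for name, vec in features.items()})
```

The only design point the sketch tries to show is that inputs of very different shapes (eight vision values, four leg forces, six IMU channels) all end up as vectors of the same length, which is what lets a later stage combine them.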

2. Cross-Modality Attention

A “fusion network” weighs the importance of each input according to the context. For example:

- If vision is clear, it takes precedence.
- If vision is obscured, vibration and touch inputs get higher weight.
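This context-dependent weighting can be approximated with a softmax over per-modality reliability scores. It is a simplified stand-in, not full cross-modality attention and not necessarily WildFusion's mechanism: the function `fuse` and the hand-set reliability scores are hypothetical, whereas a learned system would compute such scores from the sensor data itself.

```python
import math
from typing import Mapping, Sequence


def softmax(scores: Sequence[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(
    features: Mapping[str, Sequence[float]],
    reliability: Mapping[str, float],
) -> tuple[list[float], dict[str, float]]:
    """Blend per-modality feature vectors using softmax(reliability) weights.

    Hypothetical helper: all feature vectors must share the same length.
    """
    names = list(features)
    weights = softmax([reliability[n] for n in names])
    dim = len(features[names[0]])
    fused = [0.0] * dim
    for w, name in zip(weights, names):
        vec = features[name]
        if len(vec) != dim:
            raise ValueError(f"{name} has length {len(vec)}, expected {dim}")
        for i, v in enumerate(vec):
            fused[i] += w * v  # weighted sum, element by element
    return fused, dict(zip(names, weights))


# Toy 2-D features for three modalities (made-up numbers).
feats = {"vision": [1.0, 0.0], "vibration": [0.0, 1.0], "touch": [0.5, 0.5]}

# Clear daylight: vision judged reliable.
_, w_clear = fuse(feats, {"vision": 3.0, "vibration": 1.0, "touch": 1.0})
# Dim light or leaf cover: vision judged unreliable.
_, w_dark = fuse(feats, {"vision": -1.0, "vibration": 2.0, "touch": 2.0})

print({k: round(v, 2) for k, v in w_clear.items()})
print({k: round(v, 2) for k, v in w_dark.items()})
```

With a high vision score, vision dominates the blend; when vision is marked unreliable, the same function shifts most of the weight onto vibration and touch.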

3. Policy Learning

The fused sensory data is used to train the robot’s decision-making system using reinforcement learning. It learns which combinations of steps, stances, or rebalancing actions work best on a given surface.
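The source does not detail WildFusion's training setup, so the sketch below only illustrates the general idea of learning which action works best on each surface. It uses a tabular epsilon-greedy contextual bandit, a much simpler relative of full reinforcement learning with no multi-step consequences. The terrain labels, action names, and the success probabilities in `SUCCESS_PROB` are invented for the example.

```python
import random

TERRAINS = ["grass", "gravel", "mud"]
ACTIONS = ["normal_trot", "short_steps", "wide_stance"]

# Invented probabilities that an action keeps the robot stable on a terrain.
SUCCESS_PROB = {
    "grass": {"normal_trot": 0.9, "short_steps": 0.5, "wide_stance": 0.4},
    "gravel": {"normal_trot": 0.4, "short_steps": 0.9, "wide_stance": 0.5},
    "mud": {"normal_trot": 0.2, "short_steps": 0.4, "wide_stance": 0.9},
}


def train(episodes: int = 5000, epsilon: float = 0.1, seed: int = 0) -> dict[str, dict[str, float]]:
    """Learn a per-terrain value estimate for each action (epsilon-greedy bandit)."""
    rng = random.Random(seed)
    q = {t: {a: 0.0 for a in ACTIONS} for t in TERRAINS}  # mean reward so far
    n = {t: {a: 0 for a in ACTIONS} for t in TERRAINS}    # times each action was tried
    for _ in range(episodes):
        # Assume the fused perception has already labeled the current terrain.
        terrain = rng.choice(TERRAINS)
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)  # explore
        else:
            action = max(ACTIONS, key=lambda a: q[terrain][a])  # exploit
        # Simulated outcome: reward 1 if the robot stayed stable, else 0.
        reward = 1.0 if rng.random() < SUCCESS_PROB[terrain][action] else 0.0
        n[terrain][action] += 1
        # Incremental mean update: Q <- Q + (r - Q) / N
        q[terrain][action] += (reward - q[terrain][action]) / n[terrain][action]
    return q


q = train()
policy = {t: max(ACTIONS, key=lambda a: q[t][a]) for t in TERRAINS}
print(policy)
```

Because every action is still tried occasionally (with probability `epsilon`), the estimates keep improving, and the learned table ends up favoring the action with the highest simulated success rate on each terrain.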

💡 A Glimpse Into the Future

WildFusion is not just a patchwork of sensors—it’s a template for building “embodied intelligence” in machines. As sensor hardware becomes smaller and more affordable, the applications of WildFusion could expand into:

- Autonomous Mars Rovers 🪐
- Military Reconnaissance Drones 🛰
- Delivery Robots for Rural Areas 🚚
- Urban Search & Rescue Missions 🚨

The future of robotics lies in not just seeing the world—but feeling it. And WildFusion brings us a step closer to that reality.

🔁 Conclusion

WildFusion marks a major step toward making robots truly autonomous in the real world. By combining sensory cues—just like a human hiker or firefighter would—these intelligent machines can now navigate unpredictable terrain, adapt to changing environments, and complete critical missions.

🔗 Read the full news story on ScienceDaily

💬 What Do You Think?

How do you see this changing the way we use robots in real life?

Will this help in disaster zones, forests, or agriculture?

Drop your thoughts in the comments—and don’t forget to share this post with those who believe in the power of AI.