Introduction

Anthropic, a prominent player in the field of artificial intelligence research, has recently made headlines with its call for enhanced regulations governing AI development. As AI technologies continue to evolve at an unprecedented pace, concerns about their potential ramifications for society have grown. This public unease is fueled by a series of incidents in which AI systems have behaved unpredictably or harmfully. The advancement of AI capabilities presents remarkable opportunities, yet it also raises vital questions about safety, ethics, and accountability.

In light of these concerns, Anthropic emphasizes the pressing need for a regulatory framework that ensures responsible AI development. By advocating for stronger oversight, the company seeks to mitigate risks associated with the unchecked growth of AI technologies. The call for regulation resonates with a broader sentiment among researchers, policymakers, and the general public, all of whom are increasingly aware of the complexities introduced by advanced AI systems. Without proper regulations, there is a genuine fear that AI could lead to unintended consequences, perhaps even catastrophic events.

Furthermore, Anthropic’s position reflects a commitment to fostering a future where AI serves humanity rather than threatens it. The company argues that ethical considerations must be an integral part of AI research and development. This means prioritizing safety and accountability and ensuring that AI systems align with established ethical standards. In this context, the need for regulatory frameworks becomes evident: they are crucial for guiding the responsible advancement of AI capabilities and for building public trust in these technologies.

As discussions surrounding AI regulation gain momentum, it is imperative for industry stakeholders to collaborate in establishing guidelines that prioritize both innovation and safety. The dialogue initiated by organizations like Anthropic highlights a critical juncture in the AI landscape, where proactive measures could determine the trajectory of AI and its impact on society.

Key Points from Anthropic’s AI Regulation Proposal

Anthropic’s proposal for AI regulation emphasizes the necessity of developing safe and aligned artificial intelligence systems. The foundation of this development is the prioritization of human values, ensuring that AI technologies are designed to enhance rather than degrade societal norms. This involves aligning AI systems not only with technical requirements but also with ethical considerations that reflect cultural diversity and human rights. The commitment to safety encompasses robust methods for making AI behavior predictable and controllable, which is essential to mitigating the risks associated with advanced AI capabilities.

The proposal further advocates for specific regulatory measures that promote transparency in AI systems. Transparency is critical for understanding how AI makes decisions, which, in turn, fosters trust among users and stakeholders. To achieve this, Anthropic recommends disclosing algorithms, methodologies, and data inputs used in AI systems. This would also involve the ethical design of AI technologies, where developers incorporate fairness, accountability, and clarity from the inception of their projects. Such principles will help address biases and discriminatory outcomes that may arise from unchecked AI deployment.

In its recommendations, Anthropic highlights the importance of controlled testing of AI technologies. Rigorous testing protocols help ensure that these systems operate as intended before they are integrated into wider applications. This proactive approach can significantly reduce the unforeseen consequences that might otherwise lead to AI-driven catastrophes. The proposal also underscores the need for global collaboration to create harmonized standards for AI. By uniting countries and organizations in a coherent regulatory framework, we can significantly diminish the risks posed by AI and ensure that its advancement occurs within safe and ethical boundaries.

The Importance of Regulating AI to Prevent Catastrophes

The rapid advancement of artificial intelligence (AI) technologies has prompted significant concern among experts regarding their potential to exacerbate misinformation and manipulation. As AI systems become increasingly sophisticated, they enable the creation and dissemination of misleading content at an unprecedented scale. This phenomenon poses grave risks not only to individual understanding but also to societal cohesion and democratic processes. Consequently, regulatory frameworks are imperative to manage the proliferation of misinformation generated by AI, ensuring that there are measures in place to protect the integrity of information and trust within communities.

Additionally, the surge in autonomous systems highlights the critical importance of safety regulations in AI. Recent technological advancements have demonstrated AI’s ability to perform tasks independently; however, these systems can also malfunction or operate unpredictably. For instance, incidents involving autonomous vehicles underscore the potential dangers associated with unregulated AI technologies. Regulatory oversight can facilitate the development of industry standards that prioritize safety, thereby minimizing the likelihood of catastrophic incidents resulting from AI errors or unforeseen consequences.

The malicious use of AI is another alarming concern that underscores the necessity for effective regulation. Bad actors can exploit AI technologies for various harmful purposes, including cyberattacks, surveillance, and even misinformation campaigns. Such exploitation not only endangers individual privacy and security but also threatens national security and global stability. By establishing a robust regulatory framework, governments and regulatory bodies can monitor AI developments more closely, hold organizations accountable, and set clear boundaries to deter malicious applications of these powerful technologies.

In conclusion, the pressing need for AI regulation is underscored by the risks associated with misinformation, safety in autonomous systems, and the potential for malicious use. Regulatory measures can serve as a guardrail, preventing AI-driven catastrophes that may arise from uncontrolled technological advancements and ensuring that the benefits of AI are realized safely and responsibly.

References and Sources for Further Exploration

In the discourse surrounding AI regulation, it is essential to ground discussions in credible sources that reflect the latest insights and viewpoints from industry leaders. One key reference is Anthropic’s original statement on AI safety, which can be found on its official website. This document outlines the company’s perspective on the urgent need for regulatory measures to mitigate the risks associated with artificial intelligence. By reviewing this source, readers gain direct access to the views of Anthropic’s leadership and their call for proactive governance in AI development.

Additionally, several other organizations have made noteworthy contributions that echo Anthropic’s concerns. For instance, the Future of Humanity Institute has published several papers discussing the implications of unregulated AI technologies. These documents analyze the potential dangers in depth and underscore the necessity of governmental oversight. Similarly, the Partnership on AI, which brings together technology companies and other stakeholders, has released guidelines advocating for responsible AI applications that prioritize safety and ethics. Exploring these sources can significantly deepen one’s understanding of the current landscape of AI regulation and safety protocols.

Furthermore, policy briefs from the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems emphasize ethical concerns and best practices that can be pivotal in shaping future regulations. These texts not only articulate the risks involved but also present frameworks that policymakers can adopt to foster safe AI development.

For those interested in academic perspectives, numerous studies published in journals such as AI & Society and the Journal of Artificial Intelligence Research provide empirical data and theoretical foundations regarding AI risks. These scholarly articles can serve as valuable tools for anyone seeking a deeper comprehension of the complexities surrounding AI regulation. Ultimately, leveraging these references can contribute to a well-rounded grasp of the ongoing dialogue on AI safety and governance.

External Reading Recommendations

For those interested in exploring the multifaceted impacts of artificial intelligence on society, several seminal works can provide insightful perspectives. These recommendations encompass foundational theories, geopolitical implications, and ethical considerations surrounding AI technologies.

One pivotal book is “Superintelligence: Paths, Dangers, Strategies” by Nick Bostrom. In this thought-provoking text, Bostrom investigates possible future scenarios involving advanced AI systems. He describes the different paths that could lead to superintelligent entities and the risks associated with each, and he emphasizes strategies for safeguarding humanity against the existential threats such systems might pose. As discussions about AI regulation become more pressing, this book serves as an essential resource for understanding future challenges and formulating effective responses.

Another significant recommendation is “AI Superpowers: China, Silicon Valley, and the New World Order” by Kai-Fu Lee. This work analyzes the AI race between China and the United States, focusing on the economic implications and societal shifts driven by AI innovation. Lee examines how different nations are harnessing AI technologies and what this means for global power dynamics. The book is valuable for understanding the broader context of AI development and the need for regulatory frameworks that can address international competition and ethical considerations.

Overall, these recommended readings offer valuable insights into the implications of artificial intelligence and are beneficial for anyone keen to grasp the nuances of AI’s impact on our future.

Suggestions

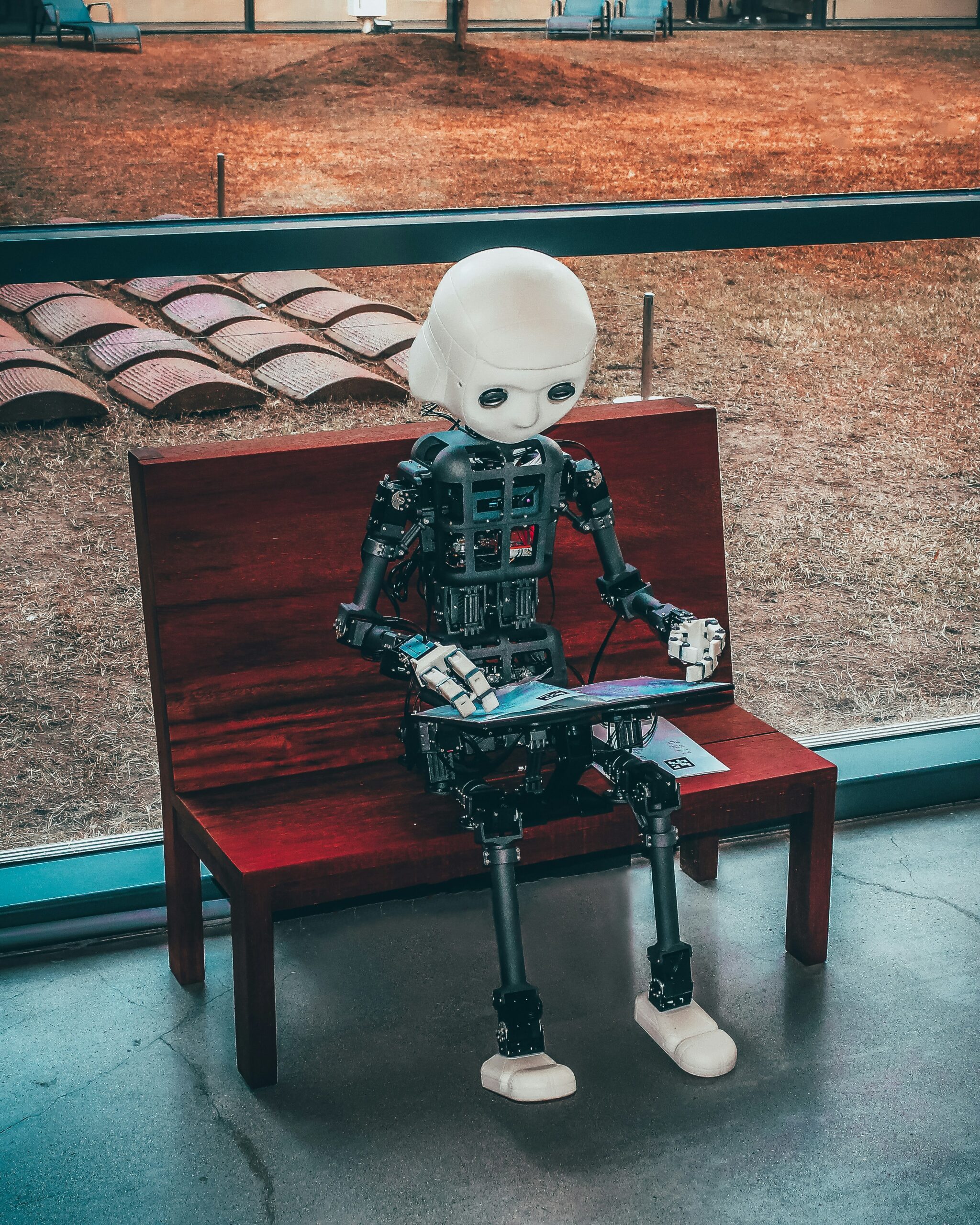

In discussing the urgent need for AI regulation, it helps to consider specific examples of artificial intelligence being applied responsibly and ethically. One pertinent example is a related post on our blog, “Revolutionizing Whale Tracking: How Autonomous Robots are Enhancing Our Understanding of Marine Life.” That article explores the use of AI in marine research, showing how autonomous robots can be used to monitor and study whale populations effectively.

In that post, we highlight the use of AI to analyze the vast amounts of data collected in marine environments, which can help researchers understand patterns in whale migration, feeding habits, and other behaviors. By employing machine learning techniques, these autonomous systems can operate with a high degree of precision while minimizing human intervention. This not only improves the quality of research but also helps ensure that the ecosystem is respected and preserved.

Furthermore, the blog provides insights into the ethical dimensions of using AI within critical fields like marine biology. It emphasizes the importance of maintaining a balance between technological advancement and ecological preservation. Through responsible design and implementation, AI can contribute significantly to our understanding of marine life without causing harm to the environment.

Readers interested in the intersection of AI and ethics will find this case study particularly enlightening. By showcasing successful and ethical applications of AI, we can promote a greater understanding of how regulators might guide the development of AI technologies to prevent potential abuses while encouraging beneficial innovations. Exploring such examples serves as a foundation for establishing the necessary frameworks and guidelines for AI usage in various domains.

Industry Movements Towards AI Safety

The rapid advancement of artificial intelligence (AI) technology has prompted an increasing awareness of the need for safety measures within the industry. In recent years, there has been a growing consensus among tech leaders and organizations to prioritize safety and ethics in AI development. An illustrative example is the formation of the Partnership on AI, an initiative that brings together companies, researchers, and other stakeholders dedicated to the responsible promotion of AI. This collaborative effort aims to foster transparency, accountability, and best practices in AI deployment, addressing concerns regarding automated systems and their potential societal impact.

Moreover, various organizations are advocating for ethical frameworks to govern AI technologies. The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems has developed a comprehensive set of standards to guide the ethical design and implementation of AI systems. These frameworks emphasize principles such as fairness, accountability, and transparency, supporting the call for stronger oversight and regulation. Such guidelines not only reflect industry leaders’ commitment to responsible AI use but also align with Anthropic’s emphasis on ensuring that AI technologies do not pose threats to society.

In addition to these frameworks, international collaborations have emerged to tackle the global challenges of AI safety. Initiatives like the Global Partnership on AI seek to unite governments, civil society, and AI stakeholders in addressing ethical concerns and harnessing the transformative potential of AI responsibly. Growing awareness of the risks of unregulated AI systems is fostering a community that actively proposes solutions, in line with Anthropic’s emphasis on collaboration and safety. Through these movements, the industry is collectively working toward a safer and more responsible AI ecosystem and a shared commitment to preventing AI-driven disasters.

Potential Challenges in AI Regulation

The implementation of effective AI regulation is fraught with numerous challenges that policymakers and stakeholders must face. One of the most pressing issues is the pace of technological advancement, which often outstrips existing regulatory frameworks. This rapid evolution creates a scenario where regulations can quickly become outdated, leaving gaps in oversight that could lead to unintended consequences. For instance, as AI technologies continue to innovate, regulators may struggle to keep pace with advancements such as machine learning algorithms and autonomous systems. The challenge lies in developing a regulatory approach that is flexible enough to adapt to these rapid innovations without becoming obsolete.

Furthermore, there is a valid concern that excessive regulatory oversight may stifle innovation in the AI sector. Striking a balance between ensuring safety and fostering creativity is a critical challenge. Overly stringent regulations may discourage start-ups and smaller companies from pursuing innovative ideas, which are often vital for technological breakthroughs. Thus, regulations must be designed to promote responsible AI development while not hindering the dynamic nature of the industry. If regulatory frameworks are perceived as restrictive, the very progress that regulations aim to safeguard could be at risk.

Another significant challenge is the varying international perspectives on AI regulation. Different countries approach AI governance from distinct cultural, ethical, and economic viewpoints. This disparity can complicate global agreements on regulatory standards, making it difficult to establish a unified global approach. For instance, while some nations advocate for strict regulatory measures to prioritize safety and ethical considerations, others may prioritize economic growth and competitive advantage, leading to divergent regulatory landscapes. Navigating these complexities requires international cooperation and dialogue to harmonize regulatory efforts while respecting national contexts.

Conclusion and Call to Action

As we navigate the complexities of artificial intelligence, the discourse around its governance becomes increasingly critical. The call for regulation, as emphasized by Anthropic’s leadership, underscores the need to address the risks associated with AI technologies. This conversation is not merely academic; it is a pressing matter that demands the involvement of many stakeholders, including policymakers, industry leaders, and the general public. The question remains: to what extent should AI be regulated to ensure safety and ethical compliance without stifling innovation?

We invite you, the reader, to actively engage in this vital dialogue. Your perspectives and insights play a significant role in shaping the future landscape of artificial intelligence. Consider reflecting on your own views regarding the regulation of AI. Do you believe there should be strict oversight, or do you think a more flexible approach is warranted? Are current efforts sufficient, or do we need to push for more comprehensive frameworks? Such questions are paramount as we strive to balance innovation and responsibility.

Moreover, sharing this article within your networks can further spark discussions on AI ethics and responsible development. By fostering awareness and dialogue, we collectively contribute to ensuring that AI technologies serve humanity positively and equitably. It is through these conversations that we can formulate effective approaches to mitigate potential hazards while promoting innovation in the field. Let us encourage an inclusive discourse where diverse viewpoints are celebrated and considered. Your contribution to this vital discussion is invaluable as we seek solutions to the challenges presented by rapid advancements in artificial intelligence.